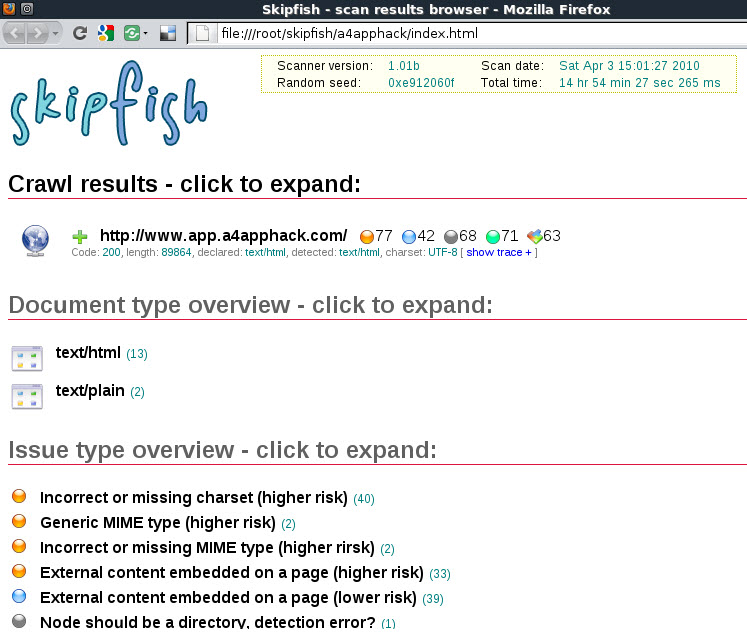

Skipfish Scanner

Skipfish is an active web application security reconnaissance tool. It prepares an interactive sitemap of the targeted site (see image) by carrying out a recursive crawl and dictionary-based probes. The resulting map is then annotated with the output from a number of active (but hopefully non-disruptive) security checks. The final report generated by the tool is meant to serve as a foundation for professional web application security assessments.

A number of commercial and open source tools with analogous functionality are readily available (e.g., Nikto, Nessus); stick to the one that suits you best. That said, skipfish tries to address some of the common problems associated with web security scanners. Specific advantages include:

However, skipfish is not a silver bullet and may be unsuitable for certain purposes. For example, it does not satisfy most of the requirements outlined in the WASC Web Application Security Scanner Evaluation Criteria (some of them on purpose, some out of necessity), and, unlike most other projects of this type, it does not come with an extensive database of known vulnerabilities for banner-type checks.

Implemented Tests

A rough list of the security checks offered by the tool is outlined below:

More information is available from the website at http://code.google.com/p/skipfish/wiki/SkipfishDoc

Installation

Note: These instructions are based on a Fedora Core install.

Download skipfish from: http://code.google.com/p/skipfish/

Prerequisites:

Install OpenSSL from source; it is available at http://www.openssl.org/source/

Install libidn from source; it is available at http://ftp.gnu.org/gnu/libidn/

Note: RPM installs of these libraries will not work.
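The two source builds can be scripted roughly as follows. This is a minimal sketch, not the author's procedure: it assumes both tarballs have already been downloaded and unpacked into directories you pass as arguments, that OpenSSL is configured with its usual `./config` script and libidn with the usual GNU `./configure`, and that it runs as root so `make install` can write under /usr/local. The function names and the `DRY_RUN` variable are hypothetical; `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: build OpenSSL and libidn from already-unpacked source trees.
# Assumption: the caller supplies the (hypothetical) directory names.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# build_tree DIR CONFIGURE_SCRIPT: configure, compile and install one tree.
build_tree() {
    dir=$1
    configure=$2
    (
        cd "$dir" || exit 1
        run "./$configure" || exit 1
        run make || exit 1
        run make install
    )
}

# build_prereqs OPENSSL_DIR LIBIDN_DIR
build_prereqs() {
    # OpenSSL ships a ./config wrapper; libidn uses a standard GNU ./configure.
    build_tree "$1" config || return 1
    build_tree "$2" configure
}
```

Usage, with the version numbers left as placeholders: `build_prereqs ./openssl-<version> ./libidn-<version>`. Building OpenSSL first mirrors the order of the list above; the two libraries do not depend on each other.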

Amend the skipfish Makefile so that CFLAGS_GEN also includes the last three directories shown below, where openssl and libidn install files on Fedora Core; otherwise skipfish may not find the prerequisite files required for the build:

------abridged-----
CFLAGS_GEN = -Wall -funsigned-char -g -ggdb -I/usr/local/include/ \
             -I/opt/local/include/ -I/usr/local/ssl/include/ \
             -I/usr/lib/ -I/usr/local/ssl/lib/ $(CFLAGS) -D_FORTIFY_SOURCE=0
------abridged-----
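Before running make, it can help to confirm that the headers skipfish compiles against are really present in the directories listed in CFLAGS_GEN. This sketch assumes the relevant headers are `openssl/ssl.h` and libidn's `idna.h`; the function names and the `SKIPFISH_INC` variable are hypothetical, and the directory list is copied from the amended line above.

```shell
# find_header NAME DIR...: print the first DIR that contains NAME, or fail.
find_header() {
    name=$1
    shift
    for d in "$@"; do
        if [ -f "$d/$name" ]; then
            echo "$d"
            return 0
        fi
    done
    echo "not found: $name" >&2
    return 1
}

# Include directories taken from the amended CFLAGS_GEN line (hypothetical name).
SKIPFISH_INC="/usr/local/include /opt/local/include /usr/local/ssl/include /usr/lib /usr/local/ssl/lib"

# check_skipfish_headers: report where each assumed-required header lives.
check_skipfish_headers() {
    # $SKIPFISH_INC is left unquoted on purpose so it splits into directories.
    find_header openssl/ssl.h $SKIPFISH_INC || return 1
    find_header idna.h $SKIPFISH_INC
}
```

Running `check_skipfish_headers` after the source installs should print one directory per header; a "not found" message points at a missing install or a missing `-I` entry.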

Possible errors encountered:

Missing lcrypto: /usr/bin/ld: cannot find -lcrypto

Correct with: ln /usr/lib/libcrypto.so.10 /usr/lib/libcrypto.so

Missing lssl: /usr/bin/ld: cannot find -lssl

Correct with: ln /usr/lib/libssl.so.10 /usr/lib/libssl.so
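Both fixes follow the same pattern, so they can be wrapped in one helper. This is a sketch under assumptions: the versioned libraries are assumed to carry a suffix such as the `.so.10` shown above (it differs between releases, so it is a parameter), the function name is hypothetical, and, unlike the `ln` commands above, it creates symbolic rather than hard links, which is the more common convention for these development links; either should satisfy the linker. It needs root when the directory is /usr/lib.

```shell
# link_dev_libs DIR SUFFIX: for libcrypto and libssl, create DIR/libX.so
# pointing at DIR/libX.so.SUFFIX when the unversioned name is missing.
link_dev_libs() {
    dir=$1
    suffix=$2
    for lib in libcrypto libssl; do
        target="$dir/$lib.so.$suffix"
        link="$dir/$lib.so"
        if [ -e "$link" ] || [ -L "$link" ]; then
            # Leave any existing file or link untouched.
            echo "exists: $link"
        elif [ -e "$target" ]; then
            ln -s "$target" "$link" && echo "linked: $link -> $target"
        else
            echo "missing: $target" >&2
            return 1
        fi
    done
}
```

For the errors above this would be invoked as `link_dev_libs /usr/lib 10`; running it a second time is harmless because existing links are skipped.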

Copy the desired dictionary from the dictionaries folder to a new file called skipfish.wl, the default wordlist name (see the -W option below).
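From the unpacked skipfish directory, this step might look like the sketch below. The function name is hypothetical, and the dictionary file name in the usage line is an assumption; check the dictionaries folder of the release you downloaded. The helper refuses to overwrite an existing skipfish.wl, because by default skipfish updates the wordlist with what it learns during scans (see the -L and -V options below).

```shell
# use_dictionary NAME [SKIPFISH_DIR]: copy dictionaries/NAME to skipfish.wl.
use_dictionary() {
    name=$1
    base=${2:-.}
    src="$base/dictionaries/$name"
    dest="$base/skipfish.wl"
    if [ ! -f "$src" ]; then
        echo "no such dictionary: $src" >&2
        return 1
    fi
    if [ -e "$dest" ]; then
        # Keep any keywords skipfish has already learned.
        echo "refusing to overwrite $dest" >&2
        return 1
    fi
    cp "$src" "$dest"
}
```

Usage, assuming a dictionary named default.wl exists: `use_dictionary default.wl`. To start over with a different dictionary, remove or rename the old skipfish.wl first.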

Execution

Usage: ./skipfish [ options ... ] -o output_dir start_url [ start_url2 ... ]

Authentication and access options:

  -A user:pass   - use specified HTTP authentication credentials
  -F host:IP     - pretend that 'host' resolves to 'IP'
  -C name=val    - append a custom cookie to all requests
  -H name=val    - append a custom HTTP header to all requests
  -b (i|f)       - use headers consistent with MSIE / Firefox
  -N             - do not accept any new cookies

Crawl scope options:

  -d max_depth   - maximum crawl tree depth (16)
  -c max_child   - maximum children to index per node (1024)
  -r r_limit     - max total number of requests to send (100000000)
  -p crawl%      - node and link crawl probability (100%)
  -q hex         - repeat probabilistic scan with given seed
  -I string      - only follow URLs matching 'string'
  -X string      - exclude URLs matching 'string'
  -S string      - exclude pages containing 'string'
  -D domain      - crawl cross-site links to another domain
  -B domain      - trust, but do not crawl, another domain
  -O             - do not submit any forms
  -P             - do not parse HTML, etc, to find new links

Reporting options:

  -o dir         - write output to specified directory (required)
  -J             - be less noisy about MIME / charset mismatches
  -M             - log warnings about mixed content
  -E             - log all HTTP/1.0 / HTTP/1.1 caching intent mismatches
  -U             - log all external URLs and e-mails seen
  -Q             - completely suppress duplicate nodes in reports
  -u             - be quiet, disable realtime progress stats

Dictionary management options:

  -W wordlist    - load an alternative wordlist (skipfish.wl)
  -L             - do not auto-learn new keywords for the site
  -V             - do not update wordlist based on scan results
  -Y             - do not fuzz extensions in directory brute-force
  -R age         - purge words hit more than 'age' scans ago
  -T name=val    - add new form auto-fill rule
  -G max_guess   - maximum number of keyword guesses to keep (256)

Performance settings:

  -g max_conn    - max simultaneous TCP connections, global (50)
  -m host_conn   - max simultaneous connections, per target IP (10)
  -f max_fail    - max number of consecutive HTTP errors (100)
  -t req_tmout   - total request response timeout (20 s)
  -w rw_tmout    - individual network I/O timeout (10 s)
  -i idle_tmout  - timeout on idle HTTP connections (10 s)
  -s s_limit     - response size limit (200000 B)

Example syntax:

  ./skipfish -o output_dir http://www.target.com/
  ./skipfish -D target2.com -o output_dir http://target1.com/
  ./skipfish -D .target.com -o output_dir http://test1.target.com/   (domain wildcarding)
  ./skipfish -A user:pass -o output_dir http://target1.com/
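For repeated scans it can be convenient to wrap the first example in a small function that gives every run its own output directory, so reports from earlier runs are kept. This is a sketch under assumptions: the function name and the timestamped reports/ layout are hypothetical, the skipfish binary is assumed to be in the current directory, and any extra options (such as -A user:pass or -D domain from the lists above) are passed straight through. `DRY_RUN=1` prints the command instead of running it.

```shell
# scan_site URL [OPTIONS...]: run skipfish against URL, writing the report
# to a new timestamped directory under ./reports (hypothetical layout).
scan_site() {
    url=$1
    shift
    # Derive a directory-safe label from the URL, e.g. www.target.com.
    host=$(printf '%s\n' "$url" | sed -e 's|^[a-zA-Z]*://||' -e 's|[/:].*$||')
    out="reports/$host-$(date +%Y%m%d-%H%M%S)"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo ./skipfish "$@" -o "$out" "$url"
    else
        mkdir -p reports || return 1
        ./skipfish "$@" -o "$out" "$url"
    fi
}
```

Usage: `scan_site http://www.target.com/` or, with credentials, `scan_site http://target1.com/ -A user:pass`. Only the parent reports/ directory is created by the function; the per-run directory is left for skipfish to create via -o.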

© VulnerabilityAssessment.co.uk 13 June 2010